Page 101 - Ruan Jian Xue Bao/Journal of Software, 2024, No. 4


Zhou Z, et al.: Robust test-time adaptation method for open-set recognition (p. 1679)

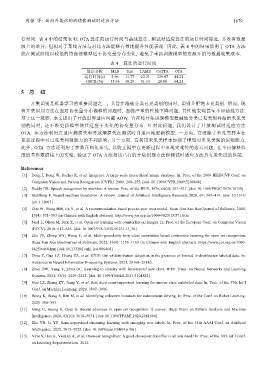

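running time. The results in Table 4 show that the running time of OTA (44.21 s) is close to that of the baseline and the test-time adaptation algorithms, with no order-of-magnitude difference, and is well below the 329.07 s of CoTTA. At the same time, OTA effectively improves performance over both the baseline and the compared methods. Table 4 therefore indicates that OTA can adapt the model to covariate shift at test time with low resource consumption, avoiding the resource overhead of retraining the model and the cost of data collection.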

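Table 4 Running time of the algorithms

| Algorithm | MLS | Tent | LAME | CoTTA | OTA |
|---|---|---|---|---|---|
| Running time (s) | 4.96 | 11.77 | 22.21 | 329.07 | 44.21 |
| OSCR (%) | 55.96 | 30.29 | 51.33 | 28.00 | 64.21 |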
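The running-time comparison in Table 4 can be checked with a few lines of arithmetic. The sketch below only restates the table values; the helper names `slowdown_vs` and `oscr_gain_vs` are hypothetical and are not part of the paper.

```python
# Values copied from Table 4: running time in seconds and OSCR in percent.
runtime_s = {"MLS": 4.96, "Tent": 11.77, "LAME": 22.21, "CoTTA": 329.07, "OTA": 44.21}
oscr_pct = {"MLS": 55.96, "Tent": 30.29, "LAME": 51.33, "CoTTA": 28.00, "OTA": 64.21}


def slowdown_vs(method: str, reference: str) -> float:
    """Return how many times longer `method` runs than `reference`."""
    return runtime_s[method] / runtime_s[reference]


def oscr_gain_vs(method: str, reference: str) -> float:
    """Return the OSCR difference of `method` over `reference` in percentage points."""
    return oscr_pct[method] - oscr_pct[reference]


if __name__ == "__main__":
    # Compare every method against the MLS baseline.
    for name in runtime_s:
        print(f"{name:>6}: {slowdown_vs(name, 'MLS'):6.2f}x MLS runtime, "
              f"{oscr_gain_vs(name, 'MLS'):+6.2f} OSCR points vs MLS")
```

By this calculation OTA takes roughly 8.9 times as long as MLS, which stays under one order of magnitude, while CoTTA takes roughly 66 times as long. OTA gains 8.25 OSCR points over MLS.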

5 Conclusion

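Open-set recognition is an important problem in machine learning: it aims to classify seen classes accurately while identifying and rejecting unseen classes. However, existing open-set recognition methods suffer severe performance degradation under covariate shift, to the point of performing worse than the baseline method. Based on this observation, this paper formulates the open-world adaptation problem AOW, which requires an open-set recognition model to robustly classify seen classes and reject unseen classes while being continually updated to adapt to a changing covariate distribution. To address this problem, we design the open-set test-time adaptation method OTA, which uses an adaptive entropy loss and an open entropy loss to update the model adaptively at test time. On the one hand, it removes the adverse effect of unseen-class samples on the model's ability to discriminate seen classes during updating; on the other hand, it exploits unseen-class samples to strengthen the model's ability to recognize unseen classes. In addition, OTA uses a parameter regularization loss to prevent catastrophic forgetting during updating. Experiments on benchmark datasets with different degrees of shift verify that OTA outperforms existing open-set recognition methods and test-time adaptation methods.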
