Page 369 - Journal of Software (《软件学报》), 2021, No. 12


ZHANG Yunpeng et al.: One-Shot Video-Based Person Re-Identification via a Neighbor Center Iteration Strategy 4033

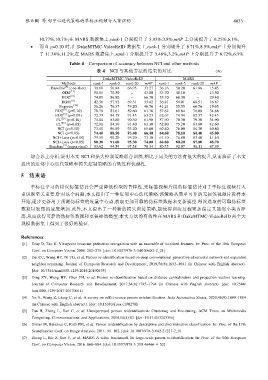

10.77% and 10.7%, respectively; on MARS, rank-1 improved by 3.93% and 3.8%, and mAP by 6.25% and 6.1%, respectively;

• When p=0.10, on DukeMTMC-VideoReID, NCI+Loss improved rank-1 over EUG and PL by 8.71% and 8.5%, and mAP by 11.34% and 11.2%, respectively; on MARS, rank-1 improved by 3.48% and 3.2%, and mAP by 6.72% and 6.5%, respectively.
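The gains quoted above are absolute differences, in percentage points, between the NCI+Loss rows of Table 4 and the EUG and PL rows at the same p. The short Python check below recomputes them from the table values; the dictionary layout and the `gains` helper are illustrative only and are not part of the paper.

```python
# Table 4 values (%) for the rows compared in the text (Duke = DukeMTMC-VideoReID).
TABLE4 = {
    "p=0.05": {
        "NCI+Loss": {"Duke rank-1": 80.30, "Duke mAP": 74.00, "MARS rank-1": 66.60, "MARS mAP": 48.70},
        "EUG": {"Duke rank-1": 72.79, "Duke mAP": 63.23, "MARS rank-1": 62.67, "MARS mAP": 42.45},
        "PL": {"Duke rank-1": 72.90, "Duke mAP": 63.30, "MARS rank-1": 62.80, "MARS mAP": 42.60},
    },
    "p=0.10": {
        "NCI+Loss": {"Duke rank-1": 79.50, "Duke mAP": 73.10, "MARS rank-1": 61.10, "MARS mAP": 41.40},
        "EUG": {"Duke rank-1": 70.79, "Duke mAP": 61.76, "MARS rank-1": 57.62, "MARS mAP": 34.68},
        "PL": {"Duke rank-1": 71.00, "Duke mAP": 61.90, "MARS rank-1": 57.90, "MARS mAP": 34.90},
    },
}


def gains(setting):
    """Percentage-point gain of NCI+Loss over (EUG, PL) for every metric."""
    rows = TABLE4[setting]
    ours = rows["NCI+Loss"]
    return {
        metric: (round(ours[metric] - rows["EUG"][metric], 2),
                 round(ours[metric] - rows["PL"][metric], 2))
        for metric in ours
    }


for setting in TABLE4:
    for metric, (vs_eug, vs_pl) in gains(setting).items():
        print(f"{setting} {metric}: +{vs_eug:.2f} vs EUG, +{vs_pl:.2f} vs PL")
```

Rounding to two decimals removes floating-point noise from the subtractions, so the printed values can be compared directly with the figures in the text.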

Table 4 Comparison of accuracy (%) between NCI and other methods on DukeMTMC-VideoReID (Duke) and MARS

| Methods | Duke rank-1 | Duke rank-5 | Duke rank-20 | Duke mAP | MARS rank-1 | MARS rank-5 | MARS rank-20 | MARS mAP |
|---|---|---|---|---|---|---|---|---|
| Baseline [9] (one-shot) | 39.60 | 56.84 | 66.95 | 33.27 | 36.16 | 50.20 | 61.86 | 15.45 |
| OIM [13] | 51.10 | 70.50 | − | 43.80 | 33.70 | 48.10 | − | 13.50 |
| BUC [19] | 74.80 | 86.80 | − | 66.70 | 55.10 | 68.30 | − | 29.40 |
| DGM [11] | 42.36 | 57.92 | 69.31 | 33.62 | 36.81 | 54.01 | 68.51 | 16.87 |
| Stepwise [10] | 56.26 | 76.37 | 79.20 | 46.76 | 41.21 | 55.55 | 66.76 | 19.65 |
| EUG [9] (p=0.10) | 70.79 | 83.61 | 89.60 | 61.76 | 57.62 | 69.64 | 78.08 | 34.68 |
| EUG [9] (p=0.05) | 72.79 | 84.18 | 91.45 | 63.23 | 62.67 | 74.94 | 82.57 | 42.45 |
| PL [25] (p=0.10) | 71.00 | 83.80 | 90.30 | 61.90 | 57.90 | 70.30 | 79.30 | 34.90 |
| PL [25] (p=0.05) | 72.90 | 84.30 | 91.40 | 63.30 | 62.80 | 75.20 | 83.80 | 42.60 |
| NCI (p=0.10) | 73.40 | 86.80 | 93.20 | 65.60 | 60.40 | 76.00 | 84.30 | 40.80 |
| NCI (p=0.05) | 74.40 | 88.50 | 93.40 | 66.40 | 64.60 | 78.10 | 84.40 | 45.80 |
| NCI+Loss (p=0.10) | 79.50 | 90.20 | 95.20 | 73.10 | 61.10 | 76.80 | 83.40 | 41.40 |
| NCI+Loss (p=0.05) | 80.30 | 91.60 | 95.30 | 74.00 | 66.60 | 80.20 | 87.80 | 48.70 |
| Baseline [9] (supervised) | 83.62 | 94.59 | 97.58 | 78.34 | 80.75 | 92.07 | 96.11 | 67.39 |

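In summary, jointly training with NCI and the loss control strategy yields large gains over comparable methods: with p=0.05, NCI+Loss achieves the highest rank-1, rank-5, rank-20, and mAP on both datasets among all methods in Table 4 except the fully supervised baseline. This verifies the effectiveness and superiority of the proposed neighbor center iteration strategy and loss control strategy.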
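The comparison above can be checked column by column against Table 4. This Python snippet uses a hypothetical helper, `best_per_column`, that is not from the paper; it reports the highest-scoring row in each column, leaves out the fully supervised baseline, and skips "−" (not reported) cells.

```python
# Table 4 rows (%), excluding the fully supervised baseline; None marks "−".
COLUMNS = ["Duke rank-1", "Duke rank-5", "Duke rank-20", "Duke mAP",
           "MARS rank-1", "MARS rank-5", "MARS rank-20", "MARS mAP"]
ROWS = {
    "Baseline (one-shot)": [39.60, 56.84, 66.95, 33.27, 36.16, 50.20, 61.86, 15.45],
    "OIM": [51.10, 70.50, None, 43.80, 33.70, 48.10, None, 13.50],
    "BUC": [74.80, 86.80, None, 66.70, 55.10, 68.30, None, 29.40],
    "DGM": [42.36, 57.92, 69.31, 33.62, 36.81, 54.01, 68.51, 16.87],
    "Stepwise": [56.26, 76.37, 79.20, 46.76, 41.21, 55.55, 66.76, 19.65],
    "EUG (p=0.10)": [70.79, 83.61, 89.60, 61.76, 57.62, 69.64, 78.08, 34.68],
    "EUG (p=0.05)": [72.79, 84.18, 91.45, 63.23, 62.67, 74.94, 82.57, 42.45],
    "PL (p=0.10)": [71.00, 83.80, 90.30, 61.90, 57.90, 70.30, 79.30, 34.90],
    "PL (p=0.05)": [72.90, 84.30, 91.40, 63.30, 62.80, 75.20, 83.80, 42.60],
    "NCI (p=0.10)": [73.40, 86.80, 93.20, 65.60, 60.40, 76.00, 84.30, 40.80],
    "NCI (p=0.05)": [74.40, 88.50, 93.40, 66.40, 64.60, 78.10, 84.40, 45.80],
    "NCI+Loss (p=0.10)": [79.50, 90.20, 95.20, 73.10, 61.10, 76.80, 83.40, 41.40],
    "NCI+Loss (p=0.05)": [80.30, 91.60, 95.30, 74.00, 66.60, 80.20, 87.80, 48.70],
}


def best_per_column(rows):
    """Map each column name to the row with the highest reported score."""
    best = {}
    for j, col in enumerate(COLUMNS):
        # Skip cells that were not reported ("−" in the table).
        scored = [(vals[j], name) for name, vals in rows.items() if vals[j] is not None]
        best[col] = max(scored)[1]
    return best


for col, name in best_per_column(ROWS).items():
    print(f"{col}: {name}")
```

Every column resolves to NCI+Loss (p=0.05). The closest case is Duke rank-20, where NCI+Loss (p=0.10) trails by 0.10.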

5 Conclusion

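In one-shot learning, incorrect label estimates severely degrade the robustness of the model, so estimating labels for unlabeled video tracklets is crucial to one-shot video-based person re-identification. To address this problem, this paper proposes a neighbor center iteration strategy. Starting from easy, reliable unlabeled tracklets, the strategy progressively updates the center points of the metric used to predict pseudo-labels, which yields more reliable pseudo-labeled data for updating the model. The amount of reliable pseudo-labeled data selected in each iteration grows slowly. In addition, this paper proposes a new loss control strategy for training that both stabilizes training and reduces intra-class distance, yielding reliable pseudo-labeled data and a more robust model. Experiments on two large-scale datasets, MARS and DukeMTMC-VideoReID, validate the effectiveness of the proposed method.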
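The iteration summarized above can be sketched as follows. This is a minimal, hypothetical sketch, not the authors' implementation, and it rests on several assumptions. Features are held fixed, whereas the real method retrains the model between iterations. Distances are Euclidean. Each identity's center is the mean of its one-shot feature and its currently selected pseudo-labeled features. The p of Table 4 is read as the fraction of unlabeled tracklets added per iteration. All function names are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def mean(vectors: List[Vector]) -> List[float]:
    """Component-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def nearest_center(x: Vector, centers: Dict[str, List[float]]) -> Tuple[str, float]:
    """Return (identity, Euclidean distance) of the center closest to x."""
    return min(((pid, math.dist(x, c)) for pid, c in centers.items()),
               key=lambda item: item[1])


def neighbor_center_iteration(labeled: Dict[str, Vector],
                              unlabeled: List[Vector],
                              p: float = 0.05) -> List[Dict[int, str]]:
    """Hypothetical sketch: progressively pseudo-label `unlabeled`.

    Returns one {unlabeled index: pseudo identity} dict per iteration.
    """
    n = len(unlabeled)
    step = max(1, round(p * n))        # assumed: p * n samples added per iteration
    selected: Dict[int, str] = {}      # pseudo-labels kept after the last iteration
    history: List[Dict[int, str]] = []
    t = 0
    while len(selected) < n:
        t += 1
        # Assumed center update: one-shot feature plus selected members of each identity.
        members = {pid: [list(f)] for pid, f in labeled.items()}
        for idx, pid in selected.items():
            members[pid].append(list(unlabeled[idx]))
        centers = {pid: mean(vs) for pid, vs in members.items()}
        # Re-predict every unlabeled sample against the updated centers.
        preds = [nearest_center(x, centers) for x in unlabeled]
        # Keep the k closest (most reliable) samples; k grows slowly with t.
        k = min(n, t * step)
        ranked = sorted(range(n), key=lambda i: preds[i][1])
        selected = {i: preds[i][0] for i in ranked[:k]}
        history.append(dict(selected))
    return history


if __name__ == "__main__":
    one_shot = {"A": [0.0, 0.0], "B": [10.0, 10.0]}
    tracklets = [[0.5, 0.0], [9.0, 10.0], [1.0, 1.0], [10.0, 8.5]]
    for t, labels in enumerate(neighbor_center_iteration(one_shot, tracklets, p=0.25), 1):
        print(t, labels)
```

The selection is recomputed over all unlabeled samples in each iteration, so an early pseudo-label can change once the centers move. A smaller p means more and smaller steps, which matches the slow growth of selected data described above.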

References:

[1] Gray D, Tao H. Viewpoint invariant pedestrian recognition with an ensemble of localized features. In: Proc. of the 10th European Conf. on Computer Vision. 2008. 262−275. [doi: 10.1007/978-3-540-88682-2_21]

[2] Dai CC, Wang HY, Ni TG, et al. Person re-identification based on deep convolutional generative adversarial network and expanded neighbor reranking. Journal of Computer Research and Development, 2019,56(8):1632−1641 (in Chinese with English abstract). [doi: 10.7544/issn1000-1239.2019.20190195]

[3] Ding ZY, Wang HY, Chen FH, et al. Person re-identification based on distance centralization and projection vectors learning. Journal of Computer Research and Development, 2017,54(8):1785−1794 (in Chinese with English abstract). [doi: 10.7544/issn1000-1239.2017.20170014]

[4] Ye Y, Wang Z, Liang C, et al. A survey on multi-source person re-identification. Acta Automatica Sinica, 2020,46(9):1869−1884 (in Chinese with English abstract). [doi: 10.16383/j.aas.c190278]

[5] Fan H, Zheng L, Yan C, et al. Unsupervised person reidentification: Clustering and fine-tuning. ACM Trans. on Multimedia Computing, Communications, and Applications, 2018,14(4):83. [doi: 10.1145/3243316]

[6] Hirzer M, Beleznai C, Roth PM, et al. Person reidentification by descriptive and discriminative classification. In: Proc. of the 17th Scandinavian Conf. on Image Analysis. 2011. 91−102. [doi: 10.1007/978-3-642-21227-7_9]

[7] Zheng L, Bie Z, Sun Y, et al. MARS: A video benchmark for large-scale person re-identification. In: Proc. of the 14th European Conf. on Computer Vision. 2016. 868−884. [doi: 10.1007/978-3-319-46466-4_52]